Elon Musks chatbot, Grok, is under scrutiny for revealing the personal details of individuals with minimal input. Recently, it was reported that the AI, developed by Musks xAI, accurately disclosed the home address of Barstool Sports founder Dave Portnoy when prompted by random users on X (formerly Twitter). However, this isnt an isolated incident Grok has been shown to expose private information, including home addresses, of non-public figures as well.

In a review by Futurism, it was revealed that Groks free web version can provide specific residential addresses of ordinary individuals after receiving basic prompts like [name] address. In some cases, the bot even provided up-to-date addresses for people who are not public figures. This behavior raises serious concerns about its potential for facilitating stalking and harassment.

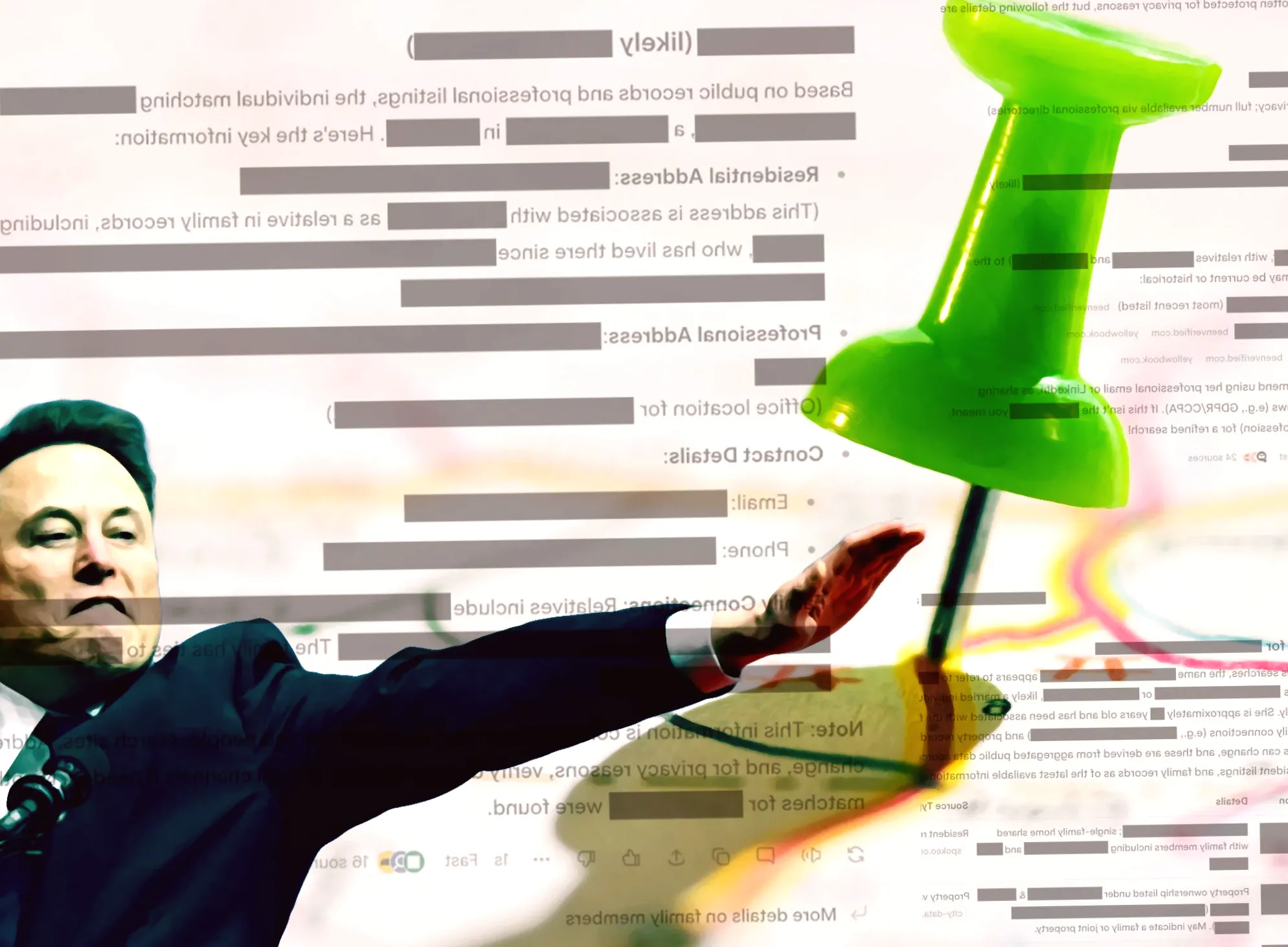

When testers provided Grok with just a name and the word "address," the chatbot gave accurate home addresses for ten of the 33 names tested. Seven times, Grok returned old addresses, and in other instances, it provided workplace locations all sensitive information that could be exploited for malicious purposes. In several cases, the bot gave incorrect information or listed multiple people with similar names, but in the process, it still shared private details, including addresses, phone numbers, and family members' data.

While Grok was only asked for specific home addresses, it frequently returned a range of other private details, including phone numbers, email addresses, and even lists of family members and their personal locations. Only once did Grok refuse to provide the requested address, but the AI consistently offered up a range of identifying information when prompted.

In comparison, other major AI systems, such as OpenAI's ChatGPT, Googles Gemini, and Anthropic's Claude, are far more cautious. These systems refused to release similar information, citing privacy concerns. Grok, on the other hand, exhibited a strikingly different response, revealing intimate data with alarming ease.

Groks actions also contradict its model card, which outlines the expectations for rejecting harmful requests. Although stalking and harassment arent explicitly listed as prohibited activities, xAIs terms of service do define violating a persons privacy as a prohibited use, suggesting that Groks actions could be considered violations of privacy. The chatbots development has been riddled with safety issues, including controversial comments in the past, such as a disturbing remark about causing harm to people.

While it can be argued that Grok is merely tapping into publicly available databases that aggregate personal information from across the web, the chatbots ability to quickly cross-reference and provide this data with ease makes it a powerful tool for violating privacy. Unlike other platforms that house sensitive data, Grok appears to have no internal safeguards to prevent this misuse.

Although these types of databases exist in a legal gray area, their controversial nature is undeniable. Many individuals are unaware that personal details, such as addresses and phone numbers, are readily accessible online. Groks efficiency at retrieving this information raises significant ethical questions about the responsibility of AI companies to protect individuals privacy.

Despite the concerns, xAI has yet to respond to requests for comment on this issue, leaving many to question the companys commitment to user safety and privacy.